The Critical Window for AI Supply Chain Security

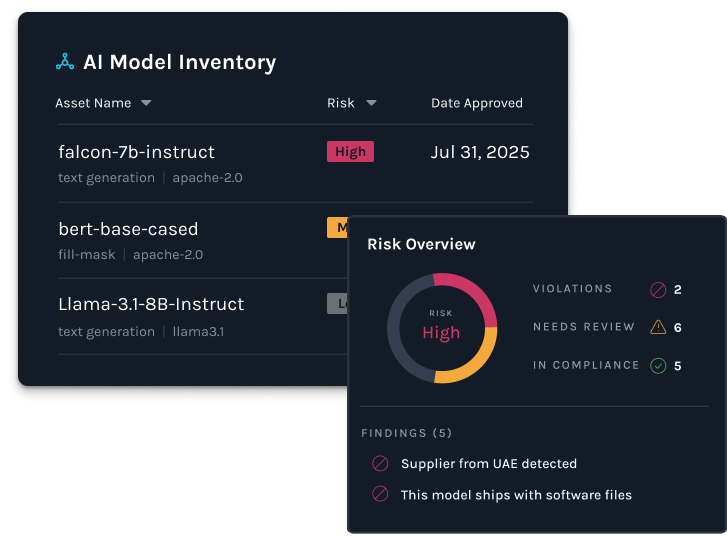

CISOs are being asked to guarantee the security of AI systems they can't see into. CTOs are deploying models without knowing their provenance. CEOs are making strategic bets on AI while flying blind on fundamental risks. Today's launch of Manifest AI Risk addresses these visibility gaps. The more pressing question isn't what we've built; it's why organizations can no longer afford to operate without AI transparency.

The AI Governance Crisis Hiding in Plain Sight

While the cybersecurity industry debates AI ethics and compliance frameworks, a more immediate crisis is unfolding in enterprise technology stacks. Organizations are deploying dozens, sometimes hundreds, of AI models without basic visibility into their supply chains. We're witnessing the same supply chain blindness that made SolarWinds and Log4Shell so devastating, except now it's happening in AI.

Consider this: research testing 16 different large language models found that they confidently suggest software packages that don't exist. Malicious actors exploit this by registering packages under these hallucinated names to distribute malware. Meanwhile, only 20% of organizations use software bills of materials (SBOMs) to track components, and existing tools often overlook the AI-specific risks that matter most.
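One basic defense is to never install an AI-suggested dependency that hasn't been vetted. The sketch below is a minimal illustration, assuming a hypothetical internal allowlist (`APPROVED_PACKAGES`) of packages an organization has already reviewed; it is not how Manifest AI Risk works. It normalizes names with the PEP 503 rule PyPI uses, splits suggestions into approved and flagged, and uses Python's standard `difflib` to list approved names that look similar, which can expose typos or lookalike packages. It does not query a package registry, so it cannot tell whether a flagged name exists or is malicious, only that nobody has vetted it yet.

```python
import difflib
import re

# Hypothetical allowlist: packages your organization has already vetted.
APPROVED_PACKAGES = {"requests", "numpy", "pandas", "transformers", "torch"}


def normalize(name):
    """Normalize a package name the way PyPI does (PEP 503)."""
    return re.sub(r"[-_.]+", "-", name).lower()


def review_suggested_dependencies(suggested, approved=APPROVED_PACKAGES):
    """Split AI-suggested package names into approved and flagged names.

    Each flagged name maps to its closest approved names, which can reveal
    a typo or a lookalike of a real package.
    """
    approved_norm = sorted(normalize(p) for p in approved)
    ok, flagged = [], {}
    for name in suggested:
        candidate = normalize(name)
        if candidate in approved_norm:
            ok.append(candidate)
        else:
            flagged[candidate] = difflib.get_close_matches(
                candidate, approved_norm, n=3, cutoff=0.6
            )
    return ok, flagged


# "numpyy" and "made_up_ai_pkg" stand in for names an assistant might suggest.
ok, flagged = review_suggested_dependencies(["Requests", "numpyy", "made_up_ai_pkg"])
print(ok)  # ['requests']
print(flagged)
```

In practice a check like this would run in CI or a pre-commit hook, so a hallucinated name is stopped before anything is downloaded rather than after.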

The DeepSeek data breach in January, which exposed over a million sensitive records, wasn't just another security incident; it was a preview of what happens when organizations adopt AI models from untrusted sources without proper governance. Several countries have banned DeepSeek on government devices, but how many enterprises are still running it in production environments without being aware of the risks?

Why Traditional Security Approaches Fall Short

Traditional cybersecurity tools don't give AI the same review and diligence they give conventional software. Because they treat AI as just another software component, they overlook the risks that distinguish AI systems. AI models aren't just code: they're learned intelligence that can be poisoned, backdoored, or manipulated in ways that conventional vulnerability scanners may not detect.

When the Hugging Face Transformers library vulnerability (CVE-2024-11393) was discovered in late 2024, it affected millions of AI deployments worldwide. Here's the critical insight: this wasn't a flaw in an AI model; it was a flaw in a software dependency that AI systems ship with. Organizations had no quick way to identify which of their AI systems were affected because they lacked AI-specific bills of materials.
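With an AI bill of materials in place, "which of our AI systems are affected?" becomes a lookup instead of an investigation. The sketch below assumes a minimal, hypothetical inventory format (`AISystemRecord`, `Component`) rather than any standard SBOM or AI-BOM schema, and every system name and version in it is made up. The fixed version passed in is a placeholder too; a real query would take it from the advisory. Version comparison only handles plain dotted numbers, so production tooling should use a full version parser instead.

```python
from dataclasses import dataclass, field


@dataclass
class Component:
    name: str
    version: str


@dataclass
class AISystemRecord:
    """One hypothetical AI-BOM entry: a deployed system, its model, and its dependencies."""
    system: str
    model: str
    components: list = field(default_factory=list)


def version_key(version, width=4):
    # Handles plain dotted numeric versions such as "4.46.3" only.
    # Zero-padding makes "4.48" compare equal to "4.48.0".
    parts = [int(p) for p in version.split(".")]
    return tuple(parts + [0] * (width - len(parts)))


def affected_systems(inventory, package, fixed_in):
    """Return the systems that depend on `package` at a version older than `fixed_in`."""
    fixed = version_key(fixed_in)
    hits = []
    for record in inventory:
        for comp in record.components:
            if comp.name == package and version_key(comp.version) < fixed:
                hits.append(record.system)
                break
    return hits


# Illustrative inventory; every name and version here is made up.
inventory = [
    AISystemRecord("support-chatbot", "example-org/chat-model",
                   [Component("transformers", "4.46.3"), Component("torch", "2.4.1")]),
    AISystemRecord("doc-search", "example-org/embedder",
                   [Component("transformers", "4.48.1")]),
    AISystemRecord("fraud-scoring", "in-house-gbm",
                   [Component("scikit-learn", "1.5.2")]),
]

# "4.48.0" is a placeholder; take the fixed version from the actual advisory.
print(affected_systems(inventory, "transformers", "4.48.0"))  # ['support-chatbot']
```

The same lookup is what makes the hours-instead-of-weeks response possible: the expensive part is building and maintaining the inventory, not querying it.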

Our research shows that security teams spend six to eight weeks manually vetting a single AI model: reading research papers, checking datasets, and verifying licenses. For organizations deploying hundreds of models, this creates an impossible choice: accept unknown risks or grind AI innovation to a halt.

The Regulatory Tsunami That Changes Everything

The regulatory landscape isn't just evolving. It's accelerating at a pace that will catch most organizations unprepared. The EU AI Act demands transparency in high-risk AI systems. The proposed FY2026 NDAA includes novel requirements for AI-specific software bills of materials. California's AB 2013 requires detailed disclosure of training data by January 2026.

These are more than compliance requirements; they are a source of competitive advantage for organizations that address them proactively. The companies building AI transparency capabilities today will have strategic advantages when regulations become mandatory tomorrow.

The pattern is evident from our government work. Organizations that implemented SBOM management early gained operational advantages that extend far beyond compliance. They can respond to zero-day vulnerabilities in hours instead of weeks, make procurement decisions faster, and reduce vendor dependence. Now, the same opportunity exists for AI transparency.

The Five Critical AI Supply Chain Gaps

Our analysis reveals five critical gaps that traditional security tools can't address:

AI Model Provenance: Unlike software code, AI models are "black boxes" containing parameters learned during training. Organizations need visibility into model origins, training data sources, and potential tampering, capabilities that traditional supply chain security tools don't provide.

AI-Generated Code Risks: As AI coding assistants become ubiquitous, they're introducing new attack vectors through hallucinated dependencies and insecure code patterns. Organizations need specialized tools to validate AI-generated components.

API Integration Security: Most AI adoption happens through API integrations with third-party services. These create new dependency chains that existing tools don't track or secure.

Regulatory Compliance: Emerging AI regulations require specific transparency and governance capabilities that traditional compliance tools weren't designed to handle.

Real-Time Risk Assessment: AI models can be updated or exhibit varying behavior over time. Organizations need continuous monitoring capabilities that detect drift, tampering, or unexpected changes in behavior.
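Tampering with locally hosted model files is the most mechanical of these checks to automate. The sketch below assumes a hypothetical manifest that maps each approved model artifact (weights, tokenizer files, configs) to the SHA-256 digest recorded when it was vetted, then re-hashes the files and reports anything missing or changed. It uses only Python's standard `hashlib` and `pathlib`. A matching digest shows the files are unchanged; it says nothing about behavioral drift, and it cannot see models consumed through a third-party API, which need different monitoring.

```python
import hashlib
from pathlib import Path


def sha256_of(path, chunk_size=1 << 20):
    """Hash a file in 1 MiB chunks so large model weights never load into memory at once."""
    digest = hashlib.sha256()
    with open(path, "rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def check_model_artifacts(manifest):
    """Compare each artifact's current SHA-256 with the digest recorded at approval time.

    `manifest` is a hypothetical mapping of file path -> expected hex digest.
    Returns a mapping of path -> "ok", "modified", or "missing".
    """
    results = {}
    for path, expected in manifest.items():
        p = Path(path)
        if not p.is_file():
            results[path] = "missing"
        elif sha256_of(p) != expected:
            results[path] = "modified"
        else:
            results[path] = "ok"
    return results
```

Run on a schedule, any status other than "ok" becomes an alert, and the manifest itself doubles as part of the model's provenance record.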

The Closing Window

AI governance requirements are arriving quickly, and the risk is growing just as fast. Early adopters are already gaining competitive advantages through AI transparency, while organizations stuck in manual governance processes are falling behind.

At its core, this isn't about achieving perfect security; it's about enabling informed risk management. Organizations must know which AI models they're running, where those models are deployed, and what happens when something goes wrong. They need the precision of an automotive recall for AI incidents: instant visibility, precise impact assessment, and automated response.

AI transparency will become essential; that's inevitable. What remains uncertain is whether your organization will lead this transformation or be forced to follow it. Early movers who establish AI supply chain visibility today will dominate tomorrow's AI-driven markets.

We've reached the inflection point. Will you be ready?

Regulations for AI governance are making headlines. "Policy Framework for Safe Adoption of Open-Weight AI Models and Datasets" is a practical guide to help enterprises safely adopt open-source AI models without getting burned by hidden risks. It covers six critical areas, from avoiding models from sanctioned countries to checking for software vulnerabilities, to help organizations navigate AI adoption while maintaining security and regulatory compliance.

Learn about Manifest AI Risk, designed to help security and compliance teams secure their AI supply chains: http://www.manifestcyber.com/ai-risk.