Build and Deploy AI, Responsibly

As AI adoption accelerates, so do the risks, ranging from outdated models and opaque training data to non-compliant licenses and geopolitical concerns. Manifest AI Risk helps your teams understand and control the real-world risks of AI, from development to deployment.

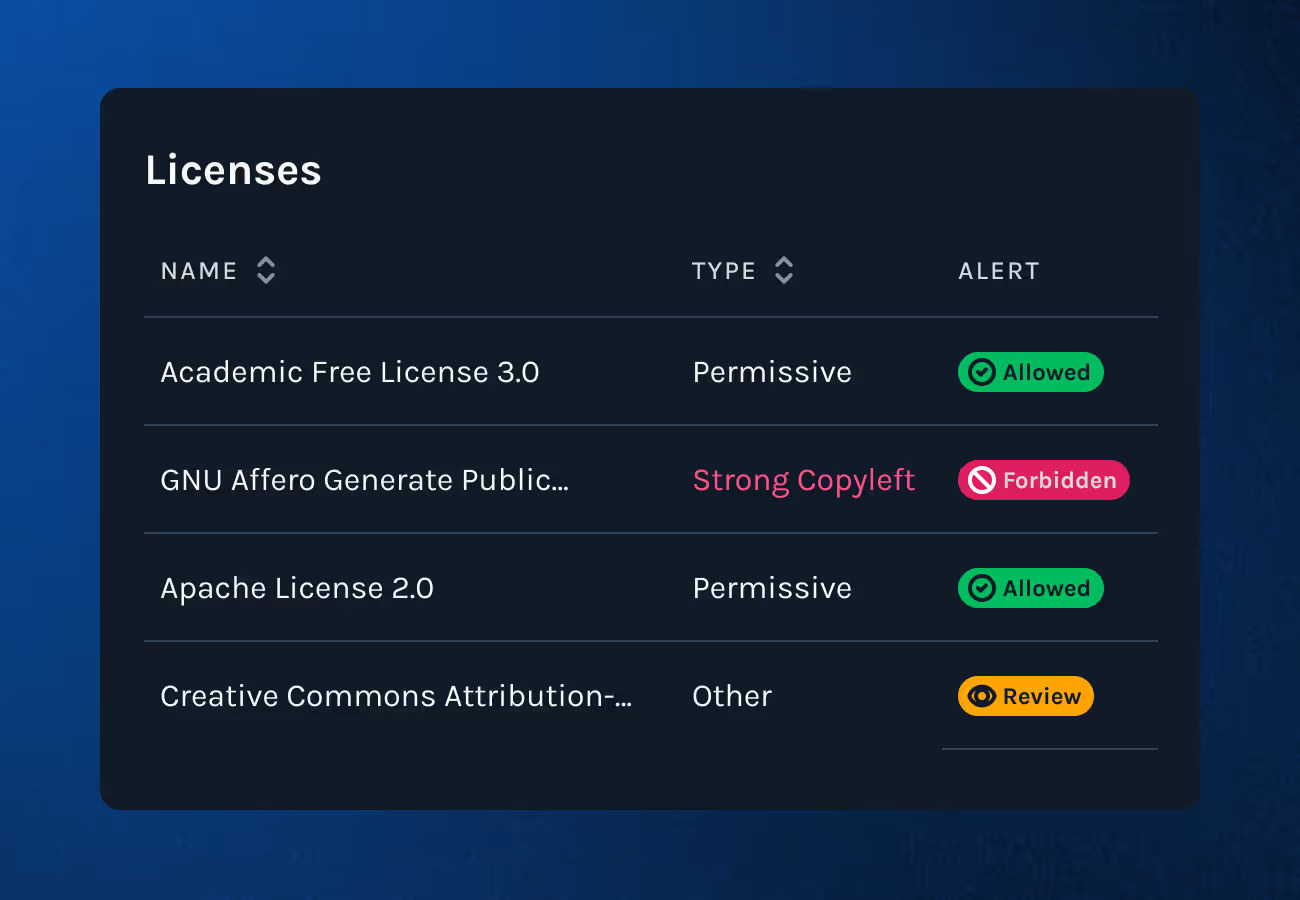

Use of non-compliant or high-risk licenses

Opaque or undocumented training datasets

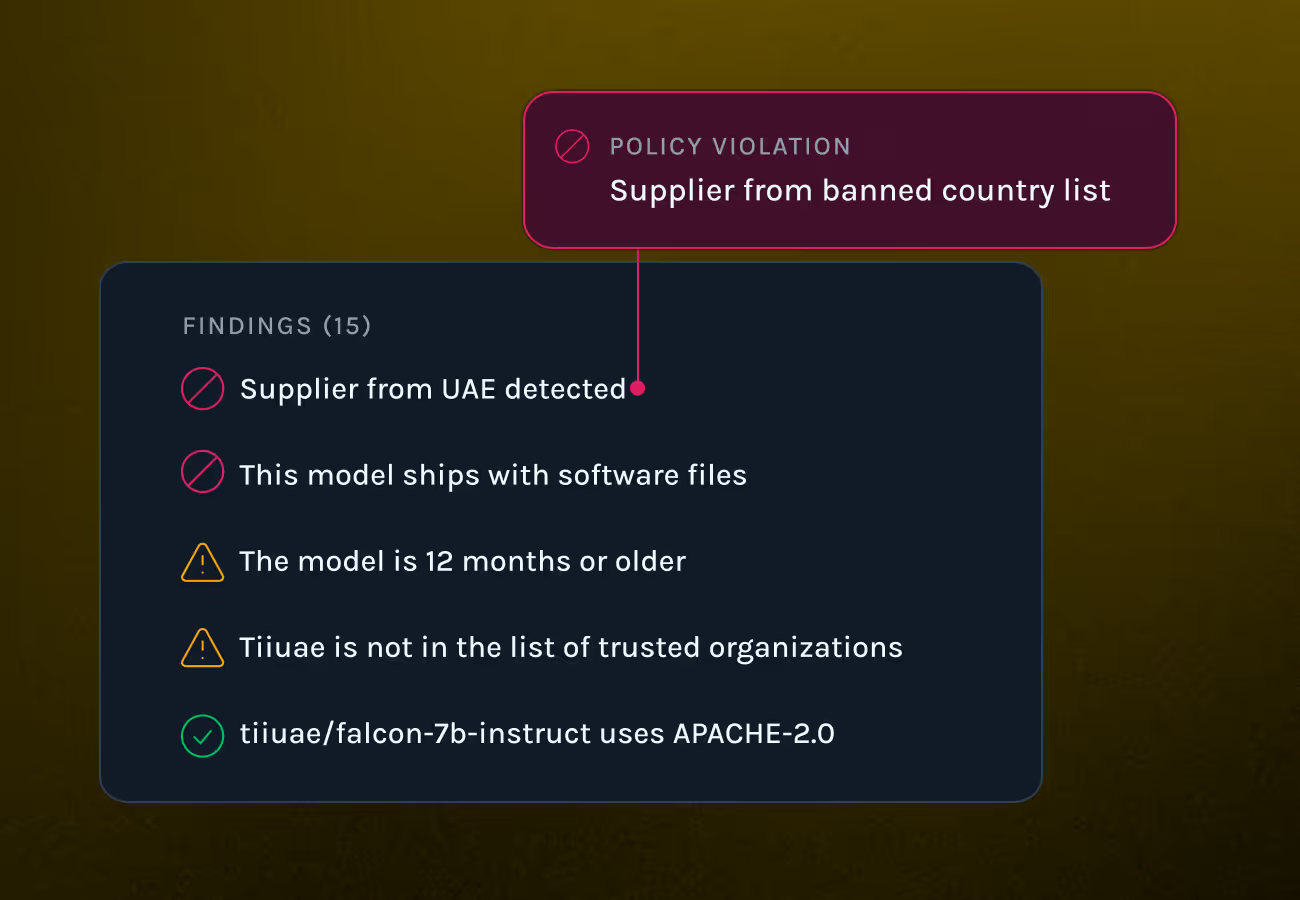

Models sourced from untrusted or flagged regions

Outdated or unmaintained AI components

Shadow AI drifting through development cycles

Security risks in third-party model dependencies


What you can do with Manifest AI Risk

Define and enforce policies that align with your organization's standards for responsible AI use. Flag or restrict components that:

- Are unmaintained or outdated

- Originate from high-risk countries

- Use non-compliant or restricted AI licenses

- Lack transparency in their training data
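To make the idea of a governance policy concrete, here is a minimal Python sketch of how the four checks above could be evaluated against a single component. It is an illustration only, not the Manifest AI Risk API: the `ModelComponent`, `Policy`, and `evaluate` names, the field names, and the example license and country values are all hypothetical.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class ModelComponent:
    """Hypothetical description of an AI component (not a Manifest data model)."""
    name: str
    license: str
    origin_country: str
    last_updated: date
    training_data_documented: bool


@dataclass(frozen=True)
class Policy:
    """Hypothetical policy thresholds an organization might define."""
    restricted_licenses: frozenset
    high_risk_countries: frozenset
    max_age_days: int


def evaluate(component: ModelComponent, policy: Policy, today: date) -> list:
    """Return the list of policy checks that the component violates."""
    violations = []
    # Unmaintained or outdated: no update within the allowed window.
    if (today - component.last_updated).days > policy.max_age_days:
        violations.append("unmaintained")
    # Originates from a country the organization treats as high risk.
    if component.origin_country in policy.high_risk_countries:
        violations.append("high_risk_origin")
    # Uses a license the organization has restricted.
    if component.license in policy.restricted_licenses:
        violations.append("restricted_license")
    # Training data is not documented.
    if not component.training_data_documented:
        violations.append("opaque_training_data")
    return violations


# Example values are placeholders, not real licenses or country codes.
policy = Policy(
    restricted_licenses=frozenset({"example-restricted-1.0"}),
    high_risk_countries=frozenset({"XX"}),
    max_age_days=365,
)
component = ModelComponent(
    name="example-model",
    license="example-restricted-1.0",
    origin_country="YY",
    last_updated=date(2022, 1, 1),
    training_data_documented=False,
)
print(evaluate(component, policy, today=date(2024, 1, 1)))
```

Returning every violated check, rather than stopping at the first, lets a team decide separately which violations should only be flagged and which should block use.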

Evaluate popular open-weight models from sources like Hugging Face for AI security risks, licensing concerns, and training data opacity. Ensure third-party models meet your internal thresholds before use.

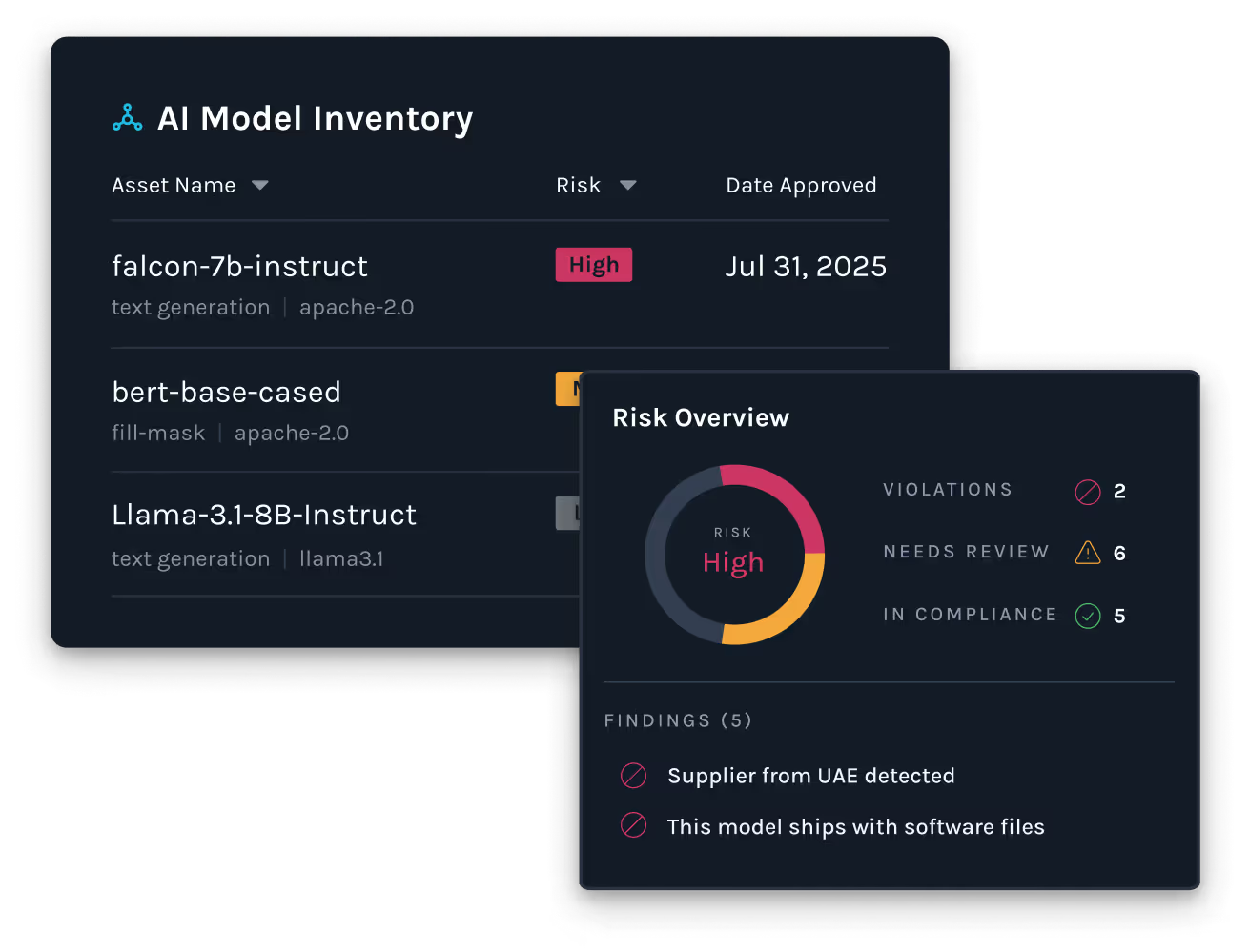

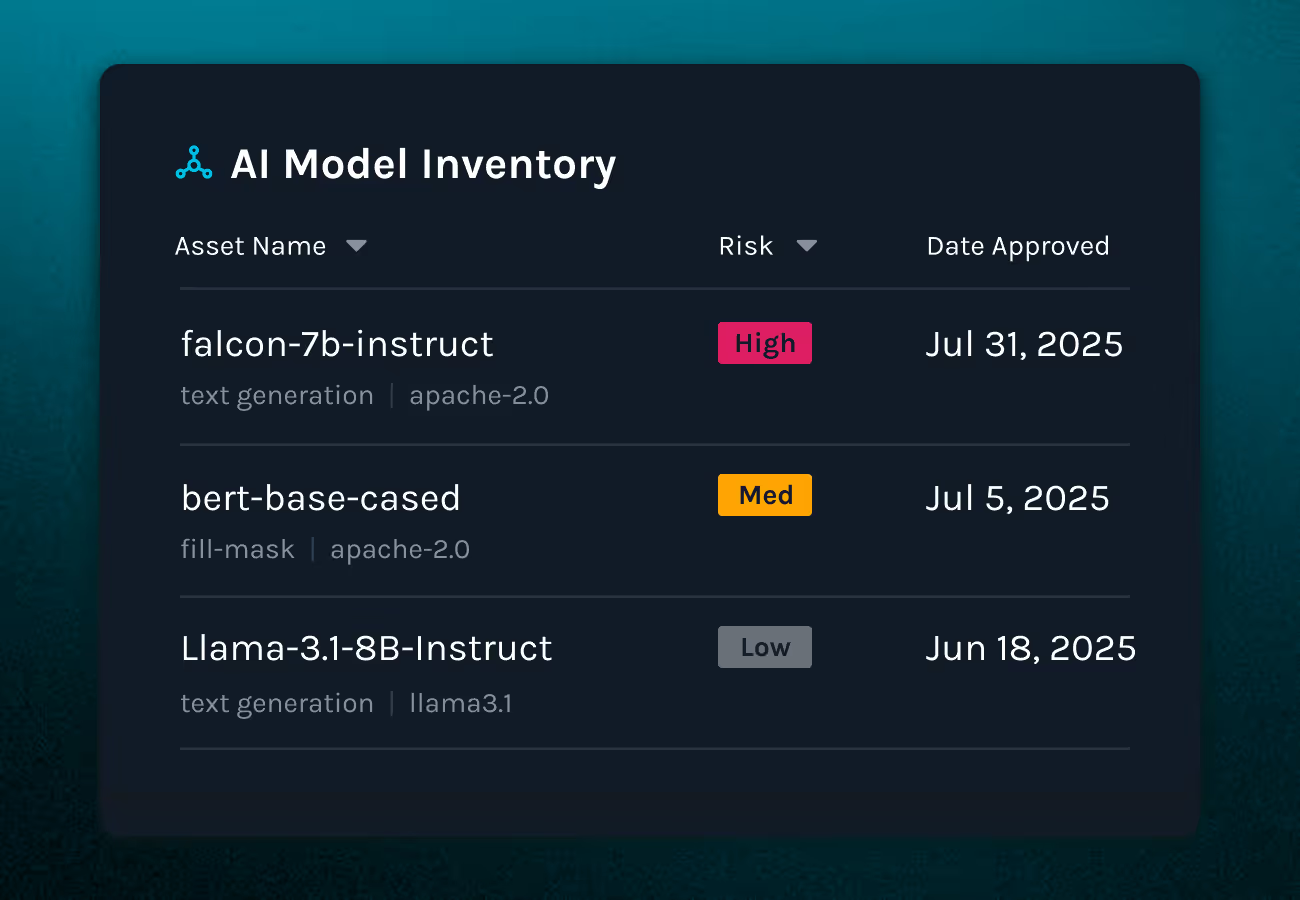

Track every AI model, whether approved, in development, or under review, in a centralized dashboard. Quickly understand model status, usage, and associated risks.

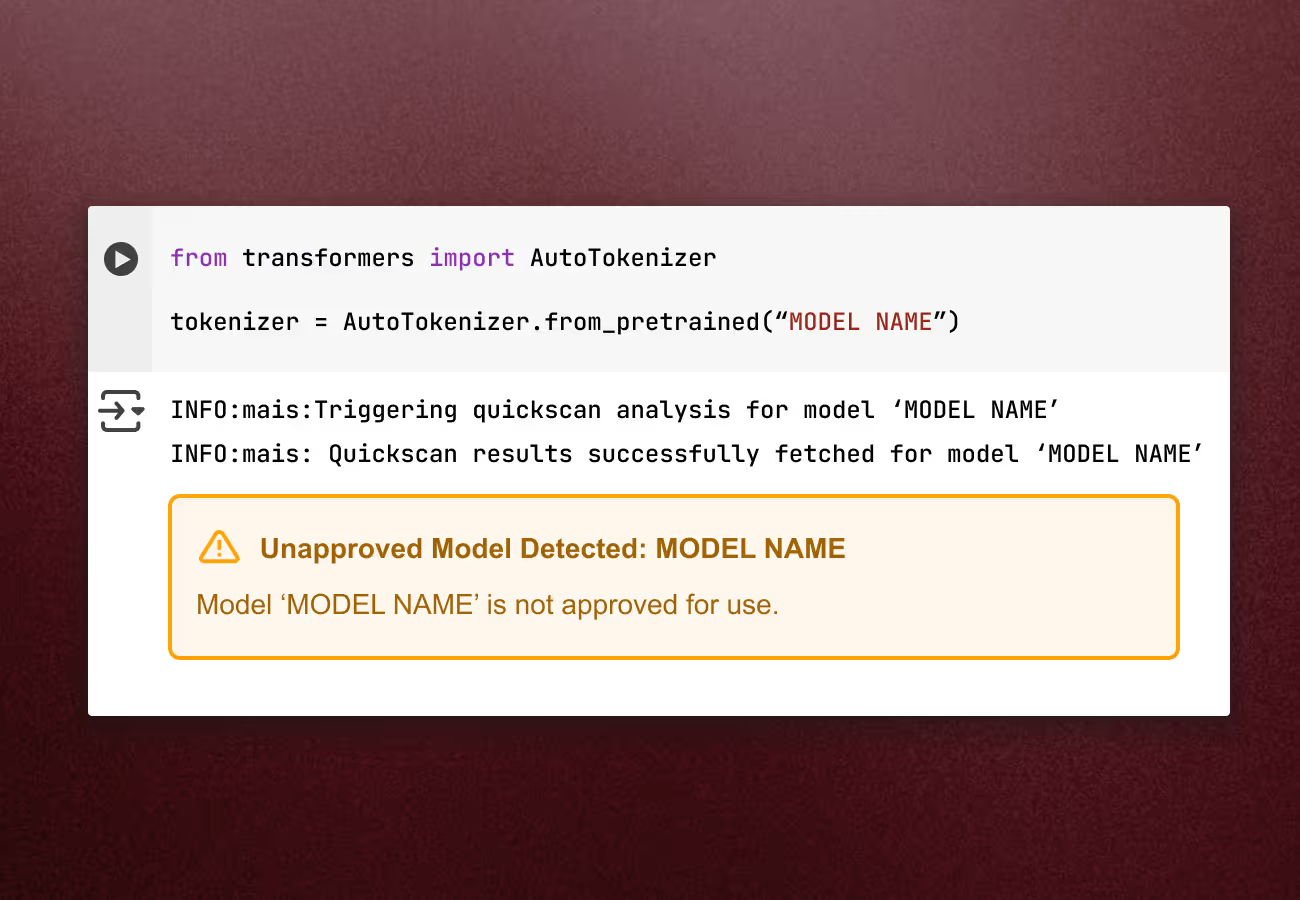

Prevent risky model use during development with our Python plugin, which automatically detects AI governance policy violations in real time and alerts developers to take early action. Complementing this, our CLI-based Source Code Scanner identifies when open-source or proprietary repositories contain embedded AI models.
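As a rough illustration of what detecting embedded models in a repository can involve, the Python sketch below walks a directory tree and lists files whose extensions are commonly used for serialized model weights. This is not the Manifest Source Code Scanner and makes no claim about how it works: the `find_model_files` function and the `MODEL_EXTENSIONS` set are assumptions chosen for the example, and a real scanner would likely inspect far more than file names.

```python
from pathlib import Path

# Assumed set of file extensions often used for serialized model weights.
# This list is illustrative, not exhaustive.
MODEL_EXTENSIONS = {
    ".safetensors", ".gguf", ".onnx", ".pt", ".pth",
    ".h5", ".pb", ".tflite", ".ckpt",
}


def find_model_files(root: str) -> list:
    """Return sorted, repo-relative paths of files that look like model weights."""
    root_path = Path(root)
    return sorted(
        path.relative_to(root_path).as_posix()
        for path in root_path.rglob("*")
        # Compare extensions case-insensitively so "MODEL.ONNX" is also caught.
        if path.is_file() and path.suffix.lower() in MODEL_EXTENSIONS
    )


if __name__ == "__main__":
    # Scan the current directory and print any candidate model files.
    for relative_path in find_model_files("."):
        print(relative_path)
```

Extension matching is a cheap first pass; it can miss renamed files and flag unrelated ones, so results from a check like this are best treated as candidates for review.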

FAQs

AI risk refers to the potential negative outcomes that can result from using artificial intelligence systems, including security vulnerabilities, legal non-compliance, data privacy issues, and model misuse. Managing these risks is essential for responsible AI deployment.

Key AI risks include:

- Use of unlicensed or non-compliant models

- Security vulnerabilities in open-source models

- Undocumented or biased training data

- Integration of outdated or abandoned models

- Regulatory non-compliance

Manifest AI Risk is an AI risk management platform that provides full visibility into the AI models, datasets, licenses, and dependencies used in your software. It enables continuous AI risk assessment, governance policy enforcement, and secure, compliant AI development.

Manifest AI Risk continuously scans open-source and custom AI models for risks related to licensing, data transparency, country of origin, and maintenance status. It detects violations of governance policies and helps teams respond proactively.

Manifest AI Risk is available through the Manifest Platform or standalone.