Why the CISA Tiger Team Wrote the First AIBOM Use Case Guide

Over the last year, I’ve had the privilege of helping kick off and co-lead CISA’s AI SBOM (AIBOM) Tiger Team — a working group focused on one simple but critical question:

What does software supply chain transparency look like when AI is involved?

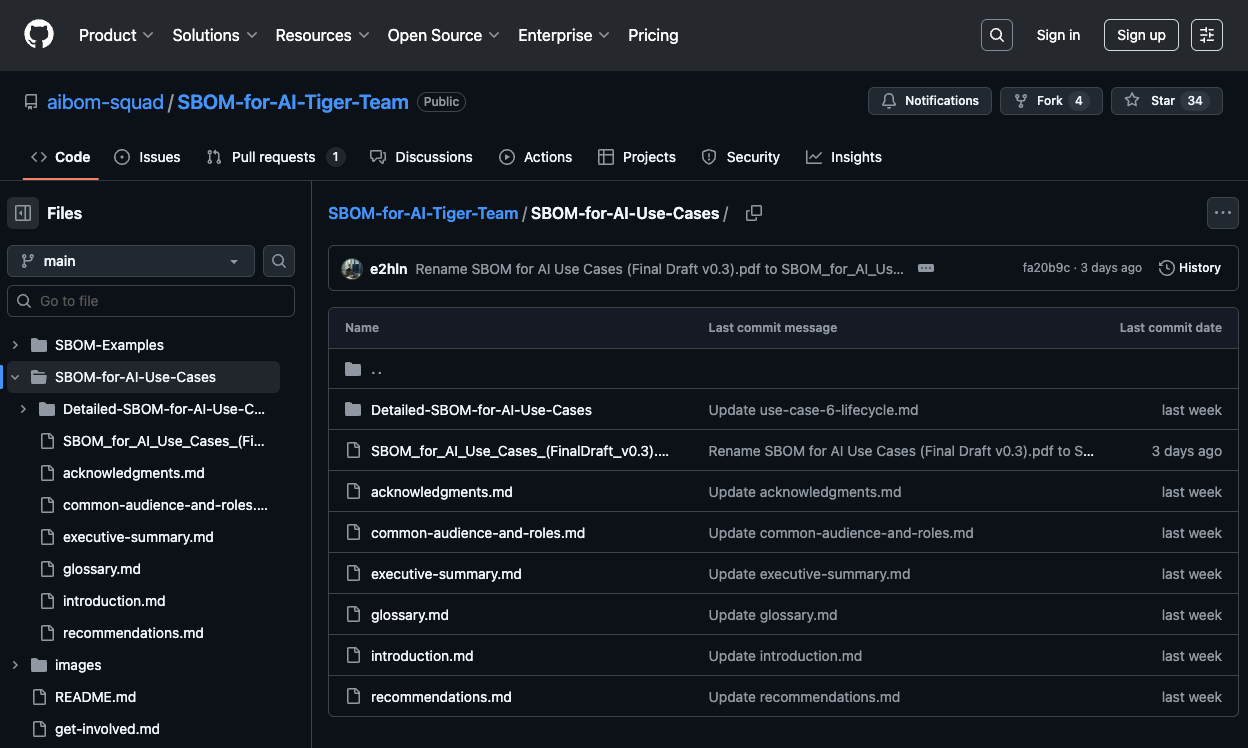

This week, I’m excited to share the first public output from that effort: The AI SBOM Tiger Team Use Case Guide.

This document is our first swing at articulating why the “bill of materials” (BOM) concept is so essential for AI, what risks it helps address, and what kinds of use cases we should all be thinking about as we build out AI governance and security processes.

Here’s why we wrote it, and why I think it matters.

First, a Bit of Background

When CISA wrapped up the initial round of SBOM Tiger Teams, Allan Friedman and the CISA team were looking to seed a new wave of working groups to tackle emerging challenges. I kept pushing hard for an AI-focused one — and eventually teamed up with Helen and Dmitry to co-lead it.

We’ve had participation from across sectors: government, vendors, and private industry. From the start, our group aligned on a core goal:

Define clear, practical use cases that explain why BOMs are relevant for AI.

Before we got into the technical weeds, like which new AI-specific fields should be added to SPDX or CycloneDX, we needed to create a resource that would help security practitioners and leadership understand why AI SBOMs matter in the first place.

That’s what this guide is meant to do.

The Why Behind AI SBOMs

The idea is pretty straightforward: AI is still software.

For most risk categories, whether business, security, or legal, organizations already have playbooks and controls for managing traditional software. But they haven’t always extended those same practices to AI.

That needs to change.

Think about it: with AI systems, we need to understand what open-source models and libraries are in use. We need to track datasets, assess legal licenses, scan for vulnerabilities, and monitor where models are deployed.

We also need to be able to:

- Understand the risk of open-weight models and public datasets

- Respond to incidents quickly (What was in that model? Where is it used?)

- Do continuous monitoring (Is this model still behaving as expected? How do I respond to future AI incidents or vulnerabilities?)

- Generate compliance documentation with a couple of clicks, not through weeks of manual effort

In other words, we need traceability, transparency, and automation. That’s what SBOMs gave us in traditional software. And it’s what AI SBOMs can give us in this new world.
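To make the traceability questions above concrete, here is a minimal sketch in Python. It is not the SPDX or CycloneDX schema and it is not taken from the guide: the `Component` and `AIBOM` classes, their fields, and every component and system name are hypothetical, chosen only to show how a machine-readable inventory turns "Where is that model used?" and "Which licenses need review?" into simple queries.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass(frozen=True)
class Component:
    """One entry in an illustrative AI bill of materials (hypothetical fields)."""
    name: str
    version: str
    kind: str      # "model", "dataset", or "library"
    license: str   # license identifier as recorded in the inventory


@dataclass
class AIBOM:
    """Inventory for one deployed system. Not a real SPDX or CycloneDX document."""
    system: str
    components: list = field(default_factory=list)


def systems_using(boms, name, version: Optional[str] = None):
    """Incident response: list the systems whose inventory includes a component."""
    hits = []
    for bom in boms:
        for c in bom.components:
            if c.name == name and (version is None or c.version == version):
                hits.append(bom.system)
                break  # one match per system is enough
    return hits


def components_outside_policy(boms, allowed_licenses):
    """License review: return (system, component, license) for unapproved licenses."""
    findings = []
    for bom in boms:
        for c in bom.components:
            if c.license not in allowed_licenses:
                findings.append((bom.system, c.name, c.license))
    return findings


# Hypothetical sample data, for illustration only.
model = Component("example-sentiment-model", "1.2.0", "model", "Apache-2.0")
dataset = Component("example-reviews-dataset", "2024-01", "dataset", "CC-BY-NC-4.0")
library = Component("example-tokenizer-lib", "0.9.1", "library", "MIT")

boms = [
    AIBOM("support-chatbot", [model, dataset, library]),
    AIBOM("review-triage", [model, library]),
    AIBOM("billing-service", [library]),
]

affected = systems_using(boms, "example-sentiment-model")
flagged = components_outside_policy(boms, {"Apache-2.0", "MIT"})
print(affected)
print(flagged)
```

In practice the inventory would be generated by tooling and stored in a standard format rather than written by hand. The point of the sketch is only that once models and datasets are recorded alongside libraries, the same kinds of queries used for traditional SBOMs apply to them too.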

Why Use Cases First?

Our hypothesis was simple:

If AI SBOMs are going to take off, it’s not just about ideas. It’s about tooling.

When traditional SBOMs began to scale, it wasn’t because people fell in love with the concept. It was because tools made it easy: easy to generate SBOMs, analyze them, and integrate them into existing workflows.

The same needs to happen for AI. But before we even get to that tooling layer, we need alignment on which real-world problems AI SBOMs can help solve.

So that’s what this guide is focused on: making the case through pragmatic, security-grounded use cases — so that technical standards, tools, and policy can build from there.

Where This Is Going

You’ll see that the guide calls out needs like automation, integration into existing processes, and supporting downstream efforts like risk assessment, licensing, and compliance.

I won’t claim we solved everything; we didn’t.

But this document is meant to be a launching pad to guide future work on:

- Technical standards (SPDX/CycloneDX extensions)

- Tooling development

- More robust frameworks for AI governance

If You’re Just Getting Started with AI Governance…

Here’s my advice: Start by realizing that AI is just another form of software.

You don’t need to reinvent the wheel. You probably already have systems for:

- Tracking open-source dependencies

- Scanning for vulnerabilities

- Managing licenses

- Doing incident response

- Monitoring production systems

You just need to make sure those systems can now handle models, datasets, and AI pipelines.

And SBOMs, or more precisely, AI SBOMs, are a powerful way to do that. They give you visibility, repeatability, and the ability to reason about risk at scale.

Why This Work Matters to Manifest

At Manifest, we believe that the future of secure software supply chains depends on more than just tracking code. The AI era introduces new challenges (data lineage, model provenance, licensing, and model vulnerabilities) that demand a new kind of tooling and governance.

That’s why we’re proud to have contributed to this guide and the community shaping it. And it’s why we’re building tools that help teams generate, manage, and act on AI BOMs just as easily as they do for traditional SBOMs.

We’re just getting started, and we’re excited to help operationalize these ideas in real organizations — from compliance to incident response to continuous assurance.

Read the guide.

Get in touch if you're building your own AI governance strategy.
