A Technical Roadmap for Security Leaders

During my time as Chief of Technology Strategy at CISA, I led and collaborated on efforts like Secure by Design and Software Bills of Materials (SBOMs) that promoted transparency and security in software. But with AI, we’re staring down an explosively innovative technology that is effectively a black box to the vast majority of executives, security practitioners, and governance and compliance leaders.

Despite that opacity, organizations around the world are adopting AI at breakneck speed. A major IBM survey of 3,000 CEOs across 30 countries found that 61% are accelerating generative AI adoption, making LLMs a C-suite priority.

This disconnect between AI adoption and AI security and transparency is creating real operational problems. Development teams are moving quickly, business units are experimenting with new models, and security teams are operating in the dark. Most security leaders I talk to can’t answer basic questions about AI models:

- How can you assess risk or trust in open-weight models?

- Where did the datasets come from, and do they pose legal risk?

- How do you keep track of custom models, tuned and trained in-house?

- Which models are running in production and in what software?

- How do you ensure that engineers are only using approved and low-risk models and datasets?

- Given a dataset, can you identify all models that were trained on the dataset?

- Given a model, can you identify all of the datasets it was trained on?

Early AI security efforts treated AI as a domain completely separate from software, one assumed to require different tools, different workflows, and different methodologies.

But this misses a fundamental truth: AI is a subset of software. It follows that most of the processes and approaches used to secure software also need to be applied to AI.

Start with the Basics

Because AI is software and not an alien technology, many of the approaches that work for securing software carry over to it. The first step is to define and automate how you assess risk in AI models.

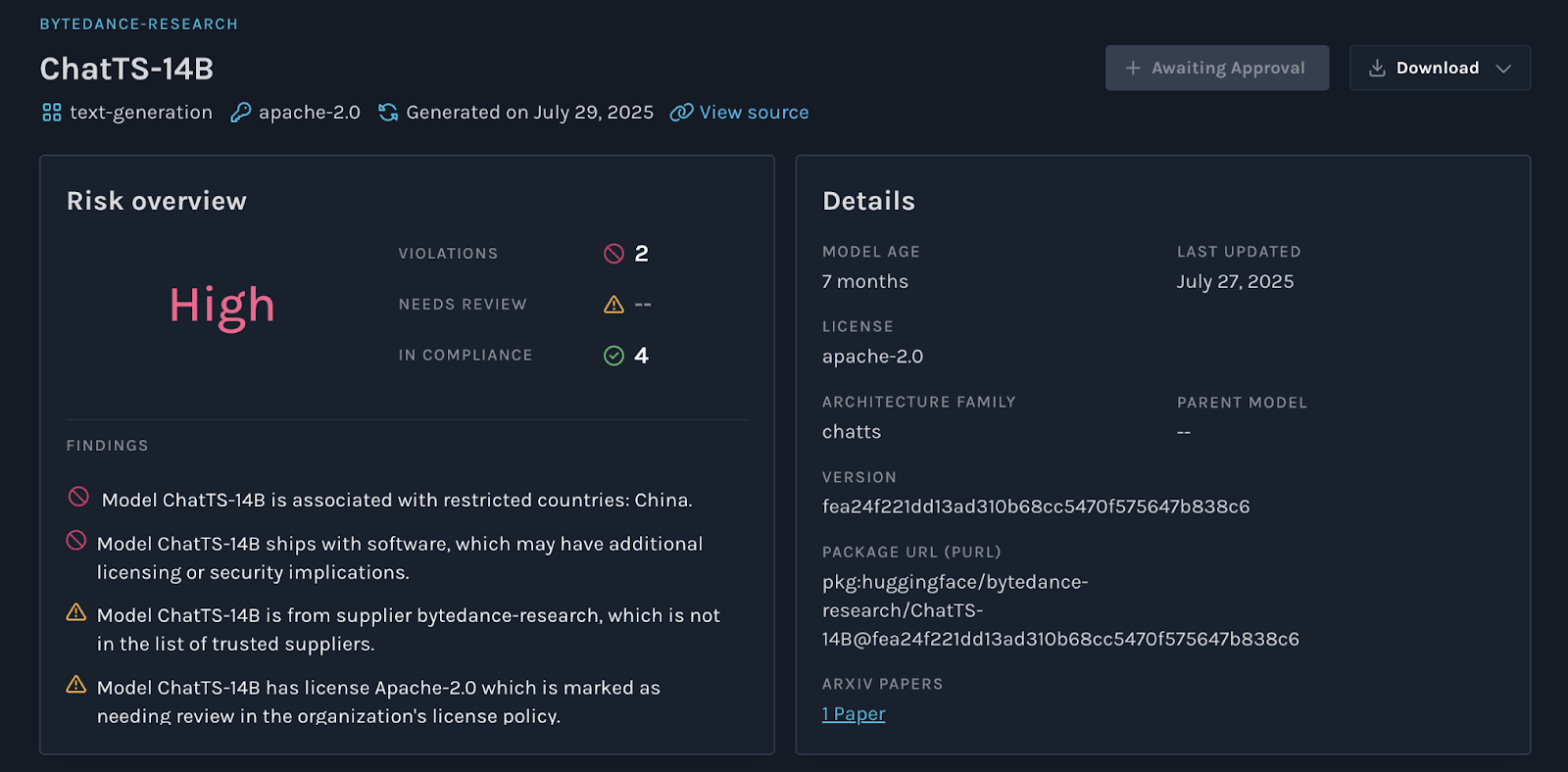

Security teams need standardized criteria to evaluate AI systems. For open-weight models, this means vetting their provenance, checking for known vulnerabilities, and assessing whether their licenses permit the intended use. For closed models, ask vendors to provide transparency artifacts and attestations. Just as software supply chain security starts with knowing the components you rely on, AI security begins with understanding your models. These processes should closely resemble how organizations already evaluate open-source software and third-party vendor products.

Key steps to operationalize AI risk assessment:

- Establish criteria for AI model evaluation (e.g., provenance, licensing, vulnerability exposure, adversarial robustness).

- Score and categorize models just as you would with open-source software or third-party vendors.

- Automate assessments using tools that analyze open-source model weights and generate risk reports, similar to how SCA tools scan for vulnerable packages.

Example: An enterprise using an open-weight LLM from Hugging Face can automatically flag outdated models, known vulnerabilities, or non-compliant licenses before those risks land in production.
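To make the scoring step concrete, here is a minimal Python sketch of an automated check against a handful of criteria. Everything in it is an assumption for illustration: the `ModelRecord` fields, the approved-license list, the one-year freshness window, and the tier thresholds are hypothetical policy choices, not values from any standard or tool.

```python
from dataclasses import dataclass, field
from datetime import date

# Hypothetical policy values; replace with your organization's own.
APPROVED_LICENSES = {"apache-2.0", "mit", "bsd-3-clause"}
MAX_AGE_DAYS = 365


@dataclass
class ModelRecord:
    """Facts about a model gathered before it is approved for use."""
    name: str
    license: str
    last_updated: date
    known_vulnerabilities: list = field(default_factory=list)
    provenance_verified: bool = False


def assess(model: ModelRecord, today: date) -> dict:
    """Return the findings for one model and a coarse risk tier."""
    findings = []
    if model.license.lower() not in APPROVED_LICENSES:
        findings.append(f"license '{model.license}' is not on the approved list")
    if (today - model.last_updated).days > MAX_AGE_DAYS:
        findings.append("model has not been updated within the allowed window")
    if model.known_vulnerabilities:
        findings.append(f"{len(model.known_vulnerabilities)} known vulnerability record(s)")
    if not model.provenance_verified:
        findings.append("provenance has not been verified")

    # Assumed tiering: no findings is low, one is medium, more is high.
    if not findings:
        tier = "low"
    elif len(findings) == 1:
        tier = "medium"
    else:
        tier = "high"
    return {"model": model.name, "tier": tier, "findings": findings}
```

In practice the inputs would come from a model hub’s metadata and a vulnerability feed instead of hand-built records, and the tiers would map onto whatever categories you already use for open-source packages and vendors.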

Open the Black Box

The first rule of software supply chain security still applies: you can’t secure what you don’t know about. For AI, that means building a comprehensive inventory of all models in use, including open-source, vendor-supplied, and custom-trained models. Without this visibility, policies and controls have nothing to attach to.

An AI inventory should capture:

- Model identity: name, version, provider, and ownership

- Deployment details: where it runs (apps, services, environments)

- Lineage: from base model to fine-tuned derivatives

- Associated datasets: sources, licensing, sensitivity
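As a rough sketch, the inventory fields above could be captured in a small record type, with lineage followed from a fine-tuned model back to its base. This also answers the two lineage questions raised earlier: which models were trained on a given dataset, and which datasets sit behind a given model. The field and function names are hypothetical, and the sketch assumes each model has at most one base model.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class InventoryEntry:
    """One model in the AI inventory (field names are illustrative)."""
    name: str
    version: str
    provider: str
    owner: str
    deployments: tuple = ()           # apps, services, environments
    base_model: Optional[str] = None  # name of the parent model, if fine-tuned
    datasets: tuple = ()              # datasets used directly by this model


def datasets_for(inventory, model_name):
    """Collect datasets used by a model and by every ancestor in its lineage."""
    by_name = {entry.name: entry for entry in inventory}
    seen, result = set(), set()
    current = by_name.get(model_name)
    while current is not None and current.name not in seen:
        seen.add(current.name)  # guards against accidental lineage cycles
        result.update(current.datasets)
        current = by_name.get(current.base_model) if current.base_model else None
    return sorted(result)


def models_trained_on(inventory, dataset):
    """List models that used a dataset directly or inherited it from a base model."""
    return sorted(
        entry.name for entry in inventory
        if dataset in datasets_for(inventory, entry.name)
    )
```

Treating a fine-tuned model as inheriting its base model’s datasets is a deliberate choice here: if a dataset is later flagged, every derivative should show up in the results, not just the model that was trained on it first.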

This inventory, however, only scratches the surface. To move beyond a simple list, organizations need to open the black box of AI with AI Bills of Materials (AIBOMs).

Just as SBOMs give you a map of software components, an AIBOM reveals what’s inside an AI model. It provides:

- Model lineage metadata – full history of base and fine-tuned versions

- Dataset transparency – origin, licensing, and compliance risks

- Dependencies – frameworks (e.g., PyTorch, TensorFlow), hardware, and supporting software

- Risk and compliance metadata – security issues, bias findings, and legal exposure

AIBOMs can help you identify which software and services depend on a model when that model is compromised, or on a dataset when it is later flagged as non-compliant. In short, AIBOMs turn an opaque, ungoverned asset into something you can monitor, audit, and control, making it possible to secure AI at scale.
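The sketch below shows what that impact analysis might look like over a set of AIBOMs held as plain Python dictionaries. The structure is illustrative only: the keys are my own shorthand and do not follow any published AIBOM schema, and the `consumers` list assumes you have joined each AIBOM with the deployment details from your inventory.

```python
# A hypothetical, simplified AIBOM; real formats carry far more detail.
example_aibom = {
    "model": {
        "name": "support-assistant",
        "version": "1.2.0",
        "base_model": "example-org/base-llm",
    },
    "datasets": [
        {"name": "support-tickets-2024", "license": "internal", "origin": "in-house"},
    ],
    "dependencies": [{"name": "torch", "type": "framework"}],
    "risk": {"security_issues": [], "bias_findings": [], "legal_notes": []},
    "consumers": ["helpdesk-api", "agent-console"],  # assumed to come from the inventory
}


def affected_consumers(aiboms, *, model=None, dataset=None):
    """List the software and services that depend on a flagged model or dataset."""
    hits = set()
    for bom in aiboms:
        info = bom["model"]
        uses_model = model is not None and model in (info["name"], info.get("base_model"))
        uses_dataset = dataset is not None and any(
            d["name"] == dataset for d in bom.get("datasets", [])
        )
        if uses_model or uses_dataset:
            hits.update(bom.get("consumers", []))
    return sorted(hits)
```

Calling `affected_consumers([example_aibom], model="example-org/base-llm")` would return both services, because the fine-tuned model records its base model in its lineage.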

Get Ahead of Shadow AI

When cloud computing took off more than a decade ago, shadow IT became a major problem that security teams eventually learned to manage. Now we face a similar threat vector in shadow AI. Developers and business units experiment with AI tools outside of official oversight, leading to untracked models and data flows.

To counter this, organizations must:

- Identify AI usage in source code and deployments

- Scan for unapproved models and model risk in software pipelines and developer artifacts (like Python notebooks)

- Require artifact generation (AIBOMs, risk reports) for custom-trained models

By doing so, you can regain visibility and control over where and how AI is being used across your enterprise.
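One simple way to start is a scan for model identifiers in source files and notebooks. The sketch below looks for string literals passed to `from_pretrained(...)`, the loading call used by Hugging Face libraries, and compares them against an allowlist. The allowlist contents and function names are hypothetical, and a regex like this only catches literal identifiers; models loaded through variables, configuration files, or hosted APIs need other detection methods.

```python
import json
import re

# Hypothetical allowlist of approved model identifiers.
APPROVED_MODELS = {"example-org/approved-llm"}

# Matches a quoted literal passed as the first argument to from_pretrained(...).
MODEL_REF = re.compile(r"""from_pretrained\(\s*['"]([^'"]+)['"]""")


def code_from_notebook(raw: str) -> str:
    """Extract the code cells from a Jupyter notebook's JSON text."""
    notebook = json.loads(raw)
    chunks = []
    for cell in notebook.get("cells", []):
        if cell.get("cell_type") == "code":
            source = cell.get("source", "")
            # A cell's source may be stored as a list of lines or a single string.
            chunks.append("".join(source) if isinstance(source, list) else source)
    return "\n".join(chunks)


def find_unapproved(source: str) -> list:
    """Return model identifiers in the source that are not on the allowlist."""
    return sorted({ref for ref in MODEL_REF.findall(source) if ref not in APPROVED_MODELS})
```

Running `find_unapproved` over every `.py` file, and over `code_from_notebook` output for every `.ipynb` file, gives a first list of models to reconcile against the inventory.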

Automate and Integrate

Finally, AI governance must be automated and integrated into existing security workflows. AI doesn’t live in a vacuum; it’s part of your broader software ecosystem.

Tie your AI risk tracking into existing tools:

- Software Composition Analysis (SCA) tools

- Third-Party Risk Management (TPRM) workflows

- CI/CD pipelines

- SIEM/SOAR response workflows

This ensures AI security becomes a natural extension of the processes you already use to secure software supply chains.
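As one possible shape for that integration, the sketch below turns model assessments into a pipeline exit code and a set of structured events that a SIEM could ingest. The assessment format (`model`, `tier`, `findings`), the blocking policy, and the event fields are all assumptions for illustration; a real integration would follow the schema your SIEM and CI system expect.

```python
import json
from datetime import datetime, timezone

# Hypothetical policy: only high-risk models fail the build.
BLOCKING_TIERS = {"high"}


def gate(assessments):
    """Return (exit_code, events); a non-zero exit code should fail the CI step."""
    events = []
    for assessment in assessments:
        if assessment["tier"] in BLOCKING_TIERS:
            events.append({
                "timestamp": datetime.now(timezone.utc).isoformat(),
                "event_type": "ai_model_policy_violation",  # assumed event name
                "model": assessment["model"],
                "tier": assessment["tier"],
                "findings": assessment["findings"],
            })
    return (1 if events else 0), events


def to_json_lines(events):
    """Serialize events one per line, a format most log shippers can forward."""
    return "\n".join(json.dumps(event, sort_keys=True) for event in events)
```

A CI job would call `gate(...)`, write the `to_json_lines(...)` output to its log or event forwarder, and exit with the returned code so that a blocked model stops the deployment the same way a vulnerable package would.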

The Path Forward

AI transparency is not a future problem; it’s a present imperative. Security leaders who build AI risk management into their existing software security programs will be ahead of the curve, enabling innovation without sacrificing security.

By starting with inventory, embracing AIBOMs, and integrating AI tracking into your workflows, you can open the black box of AI and make it a secure, compliant, and trustworthy part of your organization’s technology strategy.

The technology may be new, but the principles remain the same: you can’t secure what you can’t see. The sooner you make AI visible, the safer and more innovative your enterprise will be.